Google recently released a new image format called WebP, which is based on the intra-frame compression from the WebM (VP8) video codec. I'd seen a few comparisons of WebP floating about, but I wasn't really happy with any of them. Google has their own analysis, which I critiqued here (executive summary: bad comparison metric--PSNR--and questionable methods).

Dark Shikari's comparison was limited by testing a single quality setting and a single image. There's also this comparison and this comparison, but neither of them is that rigorous. The first is, again, a single image at one arbitrary compression level, although it does clearly show WebP producing superior results. The second at least uses multiple images, but the methods are a little fuzzy and there's no variation in compression level. It's also a bloodbath (WebP wins), so I'm a bit wary of its conclusions.

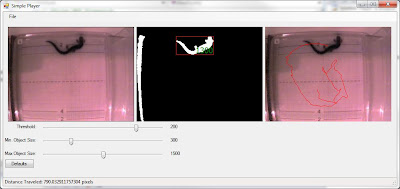

So, I coded up a quick and dirty app to compress an image to WebP, convert it back to PNG, and then compute the MS-SSIM metric between the original image and the final PNG. I used Chris Lomont's MS-SSIM implementation. I also did the same process for JPEG, using the built-in JPEG compression in .NET. Then, for the hell of it, I decided it'd also be fun to add JPEG XR into the mix, since it's probably the only other format that has a snow-cone's chance in hell of becoming viable at some future date (possibly when IE9 hits the market). I eventually got JPEG XR working, although it was a pain in the butt due to a horrible API (see below).

The input image is encoded at every possible quality setting, so the final output is a set of SSIM scores and file sizes. I also computed dB for the SSIM scores the same way x264 computes it, since SSIM output is not linear (i.e. 0.99 is about twice as good as 0.98, not 1% better):

SSIM_DB = -10.0 * log10(1.0 - SSIM)
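To make the non-linearity concrete, here is a minimal Python sketch of that conversion. The function name `ssim_to_db` is just an illustrative choice, and treating a perfect score of 1.0 as infinite dB is an assumption of this sketch rather than something the formula itself specifies.

```python
import math


def ssim_to_db(ssim: float) -> float:
    """Convert an SSIM score to decibels: -10 * log10(1 - SSIM)."""
    if ssim > 1.0:
        raise ValueError("SSIM cannot exceed 1.0")
    if ssim == 1.0:
        # Assumption of this sketch: a perfect match maps to infinity
        # instead of raising on log10(0).
        return math.inf
    return -10.0 * math.log10(1.0 - ssim)


for score in (0.90, 0.98, 0.99, 0.999):
    print(f"{score:.3f} -> {ssim_to_db(score):.2f} dB")
# 0.900 -> 10.00 dB
# 0.980 -> 16.99 dB
# 0.990 -> 20.00 dB
# 0.999 -> 30.00 dB
```

Going from 0.98 to 0.99 halves the remaining error (0.02 to 0.01), which shows up as roughly a 3 dB gain, while each extra "nine" is worth 10 dB.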

For starters, I decided to test on the image Dark Shikari used to test with, since it's a clean source and I wanted to see if I could replicate his findings. Here are the results of the parkrun test:

Quick explanation: higher SSIM (dB) is better, smaller file size is better. So in a perfect world, you'd have codecs outputting in the upper-left corner of the graph. But when images are heavily compressed the quality drops, so you end up with graphs like the above: high compression equals bad quality, low compression equals large file size.
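The sweep behind each curve can be sketched roughly as follows. This is a minimal Python illustration, not the app used for the graphs: `sweep`, `Point`, and `toy_round_trip` are hypothetical names, and the toy codec (quantize, then zlib) and its score `1 / (1 + MSE)` are stand-ins for a real encoder and MS-SSIM so the example runs without any image library.

```python
import math
import zlib
from typing import Callable, Iterable, List, NamedTuple, Tuple


class Point(NamedTuple):
    quality: int
    size_bytes: int
    score: float      # similarity in (0, 1], standing in for SSIM
    score_db: float   # -10 * log10(1 - score)


def sweep(qualities: Iterable[int],
          round_trip: Callable[[int], Tuple[int, float]]) -> List[Point]:
    """Run the codec at each quality, record size and score, sort by size."""
    points = []
    for q in qualities:
        size, score = round_trip(q)
        db = math.inf if score >= 1.0 else -10.0 * math.log10(1.0 - score)
        points.append(Point(q, size, score, db))
    return sorted(points, key=lambda p: p.size_bytes)


# Synthetic 8-bit "image" (assumption: any byte sequence works for the demo).
SIGNAL = bytes((i * 7 + (i // 13) * 31) % 256 for i in range(4096))


def toy_round_trip(quality: int) -> Tuple[int, float]:
    """Stand-in codec: coarser quantization at lower quality, then zlib."""
    step = max(1, (101 - quality) // 4)
    quantized = bytes(b // step for b in SIGNAL)
    encoded = zlib.compress(quantized, 9)
    # "Decode" by mapping each bucket back to its midpoint.
    decoded = [min(255, q * step + step // 2) for q in quantized]
    mse = sum((a - b) ** 2 for a, b in zip(SIGNAL, decoded)) / len(SIGNAL)
    return len(encoded), 1.0 / (1.0 + mse)


curve = sweep(range(1, 101), toy_round_trip)
for p in curve[:3]:
    print(p.quality, p.size_bytes, round(p.score_db, 2))
```

Plotting `size_bytes` on the x-axis against `score_db` on the y-axis for each codec gives the kind of graph described above. To test a real format, `toy_round_trip` would be swapped for a function that encodes, decodes, and scores an actual image.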

In parkrun, WebP seems to perform decently at very low bitrates, but around 15 dB JPEG starts to outperform it. Even at low bitrates, it never has a substantial advantage over JPEG. This does seem to confirm Dark Shikari's findings: WebP really doesn't perform well here. When I examined the actual output, it also followed this trend quite clearly. JPEG XR performs so poorly that it is almost not worth mentioning.

Next, I did a test on an existing JPEG image because I wanted to see the effect of recompressing JPEG artifacting. In Google's WebP analysis, they do this very thing, and I had qualms about JPEG's block-splitting algorithm being fed into itself repeatedly. Here's the image I used:

Results:

Again, absolutely dismal JPEG XR performance. Notice the weirdness in the JPEG line--I believe this is due to recompressing JPEG. I got similarly odd results when I used other JPEG source material, which does support my hypothesis that recycling JPEG compressed material in a comparison will skew the results due to JPEG's block splitting algorithm. I'll try to post a few more with JPEG source material if I can.

As far as performance goes, WebP does a little better here--it's fairly competitive up to about 23 dB, at which point JPEG overtakes it. At lower bitrates it is resoundingly better than JPEG. In fact, it musters some halfway decent looking files around 15 dB/34 KB (example), while JPEG at that same file size looks horrible (example). However, to really do a good job matching the original, including the grainy artifacts, JPEG eventually outperforms WebP. So for this test, I think the "winner" is really decided by what your needs are. If you want small and legible, WebP is preferable. If you're looking for small(ish) and higher fidelity, JPEG is a much better choice.

Next test is an anime image:

Results:

WebP completely dominates this particular comparison. If you've ever wondered what "13 dB better" looks like, compare JPEG output to WebP output at ~17 KB file sizes. JPEG doesn't reach a comparable quality to the ~17 KB WebP file until it's around 34 KB in size. By the time JPEG surpasses WebP around 26 dB, the extra bits are largely irrelevant to overall quality. JPEG XR unsurprisingly fails.
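A "how big does the other codec have to get to match this quality" comparison like the one above can be read off two curves with a little linear interpolation. The sketch below is one way to do it in Python. `size_for_quality` is a hypothetical helper, it assumes quality rises with file size, and the two curves are invented numbers for illustration only, not measurements from these tests.

```python
from typing import List, Optional, Tuple

# Each curve is a list of (file size in KB, SSIM in dB) points.
Curve = List[Tuple[float, float]]


def size_for_quality(curve: Curve, target_db: float) -> Optional[float]:
    """Smallest interpolated file size at which the curve reaches target_db.

    Assumes dB increases with size; returns None if the target is never hit.
    """
    pts = sorted(curve)
    if pts[0][1] >= target_db:
        return pts[0][0]
    for (s0, d0), (s1, d1) in zip(pts, pts[1:]):
        if d0 < target_db <= d1:
            t = (target_db - d0) / (d1 - d0)
            return s0 + t * (s1 - s0)
    return None


# Invented example curves (NOT real results).
codec_a: Curve = [(10.0, 12.0), (20.0, 18.0), (40.0, 24.0)]
codec_b: Curve = [(10.0, 8.0), (20.0, 12.0), (40.0, 20.0)]

target = 18.0  # the quality codec_a reaches at 20 KB
print(size_for_quality(codec_a, target))  # 20.0
print(size_for_quality(codec_b, target))  # 35.0
```

With these made-up curves, codec B needs 35 KB to match what codec A does in 20 KB. Linear interpolation between measured points is a simplification, so treat the result as a rough estimate.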

Since a lot of web graphics aren't still-captures from movies, I decided a fun test would be re-encoding the Google logo, which is a 25 KB PNG consisting mostly of white space:

This is a better graphic for PNG compression, but I wanted to see what I could get with lossy compression. Results:

WebP clearly dominates, which confirms another hypothesis: WebP is much better at dealing with gradients and low-detail portions than JPEG. JPEG XR is, again, comically bad.

Incidentally, Google could net some decent savings by using WebP to compress their logo (even at 30 dB, which is damn good looking, it's less than 10 KB). And I think this may be a place WebP could really shine: boring, tiny web graphics where photographic fidelity is less of a concern than minimizing file size. Typically PNG does a good job with these sorts of graphics, but it's pretty clear there are gains to be had by using WebP.

My conclusions after all this:

- JPEG XR performs so poorly that my only possible explanation is I must be using the API incorrectly. Let's hope this is the case; otherwise, it's an embarrassingly bad result for Microsoft.

- WebP is consistently better looking at low bitrates than JPEG, even when I visually inspect the results.

- WebP does particularly well with smooth gradients and low-detail areas, whereas JPEG tends to visually suffer with harsh banding and blocking artifacts.

- WebP tends to underperform on very detailed images that lack gradients and low-detail areas, like the parkrun sample.

- JPEG tends to surpass the quality of WebP at medium to high bitrates; if/where this threshold occurs largely depends on the image itself.

- WebP, in general, is okay, but I don't feel like the improvements are enough. I'd expect a next-gen format to outperform JPEG across the board--not just at very low compression levels or in specific images.

A few more asides:

- I'd like to test with a broader spectrum of images. I also need better source material that has not been tainted by any compression.

- No Windows binary for WebP... seriously? Does Google really expect widespread adoption while snubbing 90% of the non-nerd world? I worked around this by using a CodePlex project that ported the WebP command line tools to Windows. I like Linux, but seriously--this needs to be fixed if they want market penetration.

- If you ask the WebP encoder to go too low, it tends to crash. Well... maybe. The aforementioned CodePlex project blows up, but I'm assuming it's due to some error in the underlying WebP encoder. That said, by the time it blows up, it's generating images so ugly that it's no longer relevant.

- JPEG/JPEG-XR go so low that your images look like bad expressionist art. It's a little silly.

- I absolutely loathe the new classes in .NET required to work with JPEG XR. It is a complete disaster, to put it lightly, especially if all your code is using System.Drawing.Bitmap, and suddenly it all has to get converted to System.Windows.Media.Imaging.BitmapFrame. Why, MSFT, why? If you want this format to succeed, stop screwing around and make it work with System.Drawing.Bitmap like all the other classes already do.

- WmpBitmapEncoder.QualityLevel appears to do absolutely nothing, so I ended up using ImageQualityLevel, which is a floating point type. Annoying, not sure why QualityLevel doesn't work.

- The JPEG XR API leaks memory like nuts when used heavily from .NET; not even GC.Collect() statements reclaim the memory. Not sure why, didn't dwell on it too much given that JPEG XR seems to not be worth anyone's time.

- People who think H.264 intra-frame encoding is a viable image format forget it's licensed by MPEG-LA. So while it would be unsurprising if x264 did a better job with still image compression than WebP and JPEG, this is ignoring the serious legal wrangling that still needs to occur. This is not to say WebM/WebP are without legal considerations (MPEG-LA could certainly attempt to put together a patent pool for either), but at least it's within the realm of reason.

Let me know if you're interested in my code and I'll post it. It's crude (very "git'er done"), but I'm more than happy to share.

EDIT: Just to make sure .NET's JPEG encoder didn't blow, I also tried a few sanity checks using libjpeg instead. I also manually tested in GIMP on Ubuntu. The results are almost identical. If anyone knows of a "good" JPEG encoder that can outdo the anime samples I posted, please let me know of the encoder and post a screenshot (of course, make sure your screenshot is ~17 KB in size).

(and literal in more ways than one, since your memory is "trashed" hahahaha, oh that was bad...)
